Public Channels

- # 2022-summerschool-introtometagenomics

- # 2023-summerschool-introtometagenomics

- # 2024-acad-aedna-workshop

- # 2024-summerschool-introtometagenomics

- # amdirt-dev

- # analysis-comparison-challenge

- # analysis-reproducibility

- # ancient-metagenomics-labs

- # ancient-microbial-genomics

- # ancient-microbiomes

- # ancientmetagenomedir

- # ancientmetagenomedir-c14-extension

- # authentication-standards

- # benchmark-datasets

- # classifier-committee

- # datasharing

- # de-novo-assembly

- # dir-environmental

- # dir-host-metagenome

- # dir-single-genome

- # eaa-2024-rome

- # early-career-funding-opportunities

- # espaamñol

- # events

- # general

- # it-crowd

- # jobs

- # lab-community

- # lactobacillaceae-spaam4

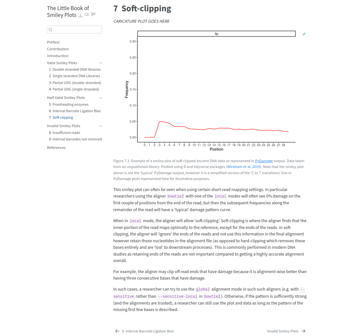

- # little-book-smiley-plots

- # microbial-genomics

- # minas-environmental

- # minas-metadata-standards

- # minas-microbiome

- # minas-pathogen

- # no-stupid-questions

- # papers

- # random

- # sampling

- # scr-protocol

- # seda-dna

- # spaam-across-the-pond

- # spaam-bingo

- # spaam-blog

- # spaam-ethics

- # spaam-pets

- # spaam-turkish

- # spaam-tv

- # spaam2-open

- # spaam3-open

- # spaam4-open

- # spaam5-open

- # spaam5-organizers

- # spaamfic

- # spaamghetti

- # spaamtisch

- # wetlab_protocols

Private Channels

Direct Messages

Group Direct Messages

@James Fellows Yates has joined the channel

@Meriam Guellil has joined the channel

@Gunnar Neumann has joined the channel

@Maria Lopopolo has joined the channel

@Claudio Ottoni has joined the channel

@channel what sort of (no stupid) questions would you be interested in covering if there was an ancient metageomics summer school?

Databases! How to choose, download, build

Anything else (because noone has an answer for choose 😆) ?

For sure, how quick do you need them or can I just post them here as they arrive in my brain

How to authenticate your species hits for dummies. Is a match really a match?

*Thread Reply:* authenticate what?

*Thread Reply:* Species hits, i’ll edit

I am working on trying to pull together information I learnt last week, planning for just personal use, but something more “formal” might be more helpful for a wider group

The reason why I ask is in my new position I have to set up a summer school (part of the grant its funded by)

So this will actually be a thing at some point, but need to work out content

Once I get results from EAGER and have no idea what they mean, I'll also have some "not stupid" questions 😬

Hi everyone! I’m wondering if people have preferences for variant calling tools for ancient pathogens. Never done this before!

1) If you want to use MultiVCFAnalyzer (e.g., to visualise cross-mapping) you must use UnifiedGenotyper 3.5 with ploidy set 2 2) Otherwise, AFAIK there isn't one in particular. FreeBayes is popular though

*Thread Reply:* I’ve been using freebayes. It’s very easy to use. I’ve played with the parameters to try to mimic the emit all sites option of UnifiedGenotyper (ie force it to be a genotype caller) so that I could use the vcfs with multivcfanalyzer or MUSIAL, but I haven’t been successful. I’m happy to share all of my scripts if you want.

*Thread Reply:* Yeah, you need a very highly specific format of your VCF files exactly as it comes out of GATK3.5 🙄

@Kelly Blevins you could also post on the MVA github page to put pressure on Alexander to find and properly release the VCF format agnostic version 😉

*Thread Reply:* @Kelly Blevins, that would be incredible! Thank you so much! I’ve never used a variant caller before so I’m starting from complete scratch here

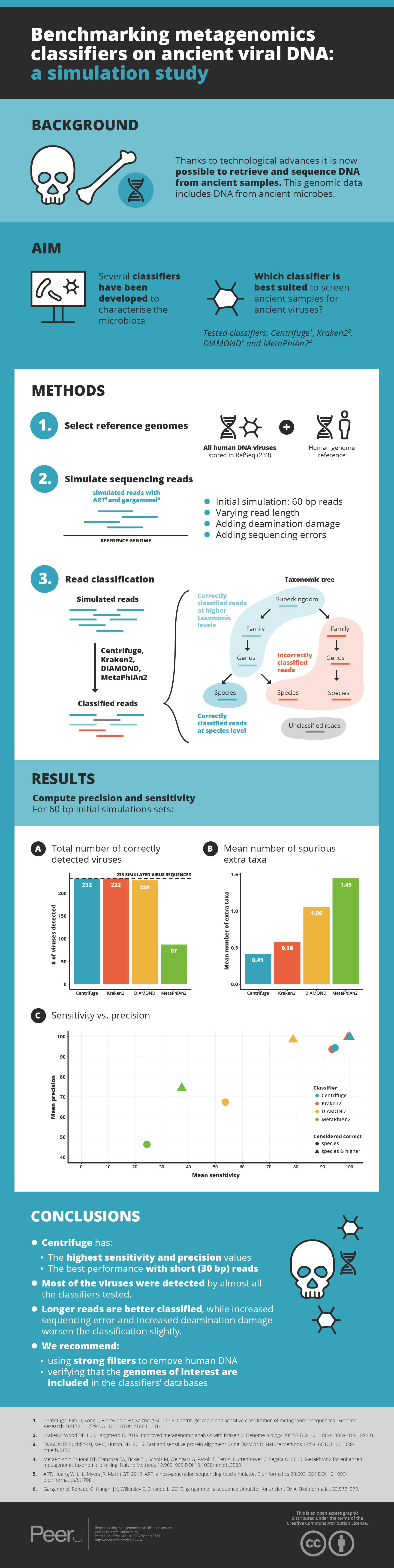

Hi everybody, would be great if someone could help me. I am trying to classify test reads (generated from RefSeq viral sequences) with MALT (latest version) using as db the RefSeq viruses. Unexpectedly, I get some misclassification outside of the superkingdom Viruses, namely within Bacteria, Archaea and Eukaryota. Does someone can give me a hint, why I get a classification ‘outside’ of the database?

What exactly do you mean by 'refseq viruses'?

What has gone into your database?

Hi James, thank you for the quick reply. That is how I build the db:

wget <ftp://ftp.ncbi.nlm.nih.gov/refseq/release/viral/viral.1.1.genomic.fna.gz>

wget <ftp://ftp.ncbi.nlm.nih.gov/refseq/release/viral/viral.2.1.genomic.fna.gz>

wget <ftp://ftp.ncbi.nlm.nih.gov/refseq/release/viral/viral.3.1.genomic.fna.gz>

wget <a href="http://ftp.ncbi.nlm.nih.gov/pub/taxonomy/accession2taxid/nucl_wgs.accession2taxid.gz">ftp.ncbi.nlm.nih.gov/pub/taxonomy/accession2taxid/nucl_wgs.accession2taxid.gz</a>

malt-build -i **.fna.gz -s DNA --index viral_db --acc2taxa nucl_wgs.accession2taxid.gz

Hm ok. Have you looked at the list of non-virsus to see what they are, whether it's consistent across all samples?

I am slightly suspicious you're using nucl_wgs accession IDs, as that is just raw sequencing data and I guess could contain contamination? On the otherhand that's just the taxonomy, so you shouldn't have those sort of refeference sequences that they could align to, in your DB 🤔

- Not yet, that is the next step on my agenda…

- You are right that this accession ID’s may not be the best choice. But on the other hand since the input sequences are ‘just’ RefSeq sequences, I would have thought that this should be rather stable. Which accession ID’s would you recommend?

What version of MALT are you using?

MALT (version 0.5.3, built 4 Aug 2021)

I think that should still work

I can't remembe off the top of my head the minimum assembly build level a 'genome' needs to be to get to RefSeq ...

Maybe there is some messy WGS stuff in there? I don't know...

Thank you James, I will try it out with the indicated accession table and see if I get more convincing results… Having a messy accession table would explain the observed pattern. Let’s see. I will report it here later. James, thank you for the quick help!!!

It would be nice if someone else could chime in though, I might be talking out of my arse 😬 (@Maxime Borry?)

Hey @Samuel Neuenschwander, At what taxonomic level do you get your assignations outside of the virus clade ?

Hi @Maxime Borry, indeed from the incorrectly classified reads ~6% are outside of the db scope, e.g. not Virsues. The classified rank varies, but is often around the species level.

Interesting, I see two possible explanations: • the accessions IDs of some of these viruses match to their host (eg. human or whatever) • The RefSeq viruses database doesn’t only contain viruses

Yes, I agree. Both points are not so nice…

Does it contain phages? That could explain some cross hits depending on the database building

That is a good point, I have to check. I don’t have the data in front of me, but I also get insects… Thank you @Meriam Guellil & @Maxime Borry!

There could be a lot of insect viruses in the DB, because they’re commonly used in molecular biology as vectors for eukaryotic cells, the so called baculoviruses

Maybe contigs are taxonomic assigned in some cases in the assembly pipeline used by some researchers, and so those contigs pop up, even if the vast majortiy of contigs are from teh virus itself?

(so point 1 of what Maxime said above)

Yes, that makes absolutely sense, if an ‘entire’ db with all taxa would be used. With a virus only db however this should not be possible, if not one or the other input is messy.

I have replaced the accession file (with the one pointed at by James) and then also used other viral sequences to create the MALT db, but more or less with the same result. I am trying to nail down the problem, but first I will go on vacation 😉 Thank you fore the great help @James Fellows Yates@Meriam Guellil@Maxime Borry!

Hi everyone! There’s a discussion going on in my lab about whether or not to sample from a tooth with a pretty large, visible cavity. I’m wondering if people have any thoughts!

*Thread Reply:* Can you share a photo or sketch of what you mean?

*Thread Reply:* We have an ongoing project on bear oral health (@Adrian Forsythe can chip in) and see that sampling from within and closely around cavities produces a very distinct microbial signature, whereas samples from healthy teeth in the mouth with cavities look almost “normal” (e.g. they hardly differ from samples from healthy individuals).

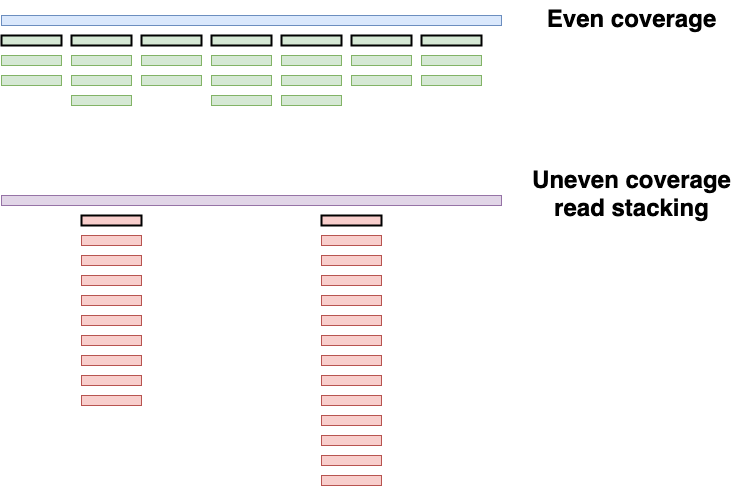

Opinion question for the pathogen peeps: I'm screening samples for pathogens, currently particularly focusing on TB and Leprosy. If you see in MALT 100% identity reads mapping to either M. leprae or M.tuberculosis, and those same reads also map to for example M. avium with one mismatch (~97%), would you trust those reads as M. leprae/M. tuberculosis? 🙃

*Thread Reply:* Calling: @aidanva @Åshild (Ash) @Meriam Guellil @Marcel Keller @Maria Spyrou (off the top of my head)

*Thread Reply:* @Betsy Nelson for TB as well

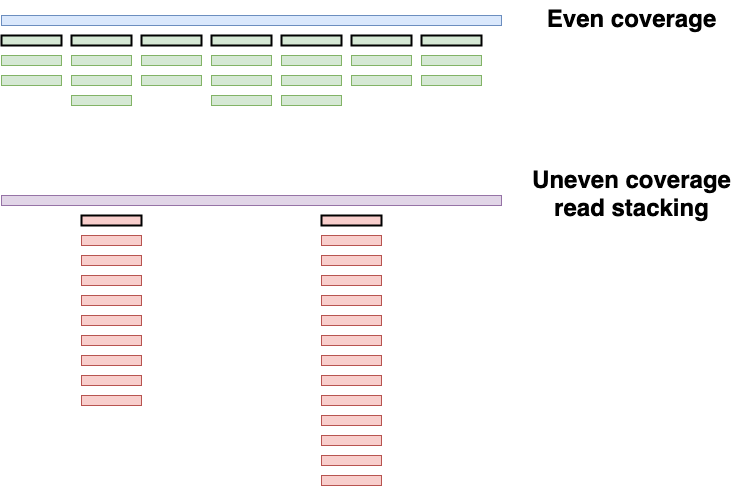

I would say it depends on different factors. How many reads do you have? Are those reads distributed evenly on your reference? Are those reads coming from a low diversity region?

So I guess my point being, it is very hard to do an assessment on a single read

one need to look at different lines to see if what you are seeing is actually the species of interest.

but maybe others can chip in since I have not worked on mycobacterium identification so far

i would map your reads to the references of the various hits, compare the number of hits normalized over the reference length (even in Mycobacterium, genome lengths can vary by quite a bit) and also investigate edit distances to try to get a handle on where the reads ''''truly'''' are coming from. as with most things we do, nothing will be "definitive" but the above should give you a better idea of the affinity of the reads to each of the reference genomes, the coverage across the difference reference genomes and the edit distance plots which look the best (ie steadily declining rather than increasing edit distances to your reference)

Others might suggest doing a competitive mapping within the mycobacterium diversity, but i have not done this myself.

Thanks @aidanva and @Ian Light for your answers! Yes, I've also been looking at the evenness of coverage and edit distance (although I still need to create plots). I think my main problem (and probably everyone else's looking into Mycobacterium species), is trying to see through all the background in the hope there are some very low levels of a true signal... I've been using BWA mapping and MALT so far, and now working on extracting all the reads mapping to the MTBC clade in the MALT results as a way to filter out background noise... Any thoughts on this approach??

I also want to try stricter BWA mapping parameters. I've been using an edit distance (-n) of 0.03 for the screening, but that still picks up very deep clusters/conserved regions with lots of multiallelic sites. But I wonder if there might still be a more random distribution buried underneath these clusters. 🤔

Yeah, Mycobacteria are a harsh one. I think in this regard @Betsy Nelson @Åshild (Ash) @Kelly Blevins can share their experience working with TB

It’s great to have this channel, and I’m happy to contribute where I’m able to. But can we establish as etiquette that questions are only answered with the thread function? This way it’s easier to keep track on what’s going on and one doesn’t get flooded with notifications.

*Thread Reply:* If you are getting bothered a lot, I would highly recommend setting your notifications to mention only:

(right click on the channel name > change notifications)

*Thread Reply:* Then you will be notified when someone wants your specific feedback, but otherwise you can just look in there when you're interested

*Thread Reply:* not bothered a lot, but I would still say for a channel meant for questions and answers (rather than general discussions or announcements) it would help

Most species have their own threshold of how much sequence coverage you need to be able to identify them for sure which depends on a lot of factors and this can sadly vary a lot. If you don't have enough coverage there are a couple of other rabbit holes you can go down (specific regions, competitive mappings, masking of conserved regions, etc) but sometimes you will need to increase the coverage to be sure. Without seeing the data its hard to tell, but I am sure the MTB people have some tips up their sleeves :)

(sorry @Marcel Keller ship has sailed for this question lets do better on the next one 😉)

@Meriam van Os I echo everyone else’s advice - breadth of coverage and edit distance are really important. If you’re picking positives from MALT for downstream investigation though, I have found that the frequency of MTBC summed reads/mycobacteria summed reads (at least .25) predicts successful capture enrichment pretty well. If you’re seeing something that looks like the attached, I would say don’t get your hopes up. How deep are these libraries sequenced? And how old? Most of my experience is from screening ~500 year old remains from central Mexico for MTBC, but I’ve worked with some more recent (200-300 years old) samples from Belgium and Spain that followed the same pattern (intermittent qPCR assay positivity (IS1081, IS610); pileup at mycobacteria node in MALT after shotgun; less than 5% of the MTBC ref genome covered after MTBC capture, where the 5% is pileup at conserved regions).

*Thread Reply:* For example of a weak positive, attached is a screenshot after a MALT screening of a shotgun library. It doesn’t look great, right? A solid chunk of reads could be assigned to the MTBC node, but the majority could not be resolved further. I was able to recover a partial genome (~70% of the genome covered at 1x) from this library. So not enough for analyses, but enough to confirm MTBC and justify making another extract or library.

*Thread Reply:* What database are you using for MALT? I made (a small) one for myobacteria and friends that I’m happy to share.

*Thread Reply:* Hey Kelly, this is super helpful, thank you! We've built a MALT database with ~600 Mycobacterium genomes (MTBC and non-MTBC species). Do you think this should be sufficient? And, so you're saying that in MALT, if about 25% of Mycobacterium reads map to the MTBC, that's a pretty good indicator? I've done capture as well, samples have about 12 -20 million collapsed reads. All of them are showing over 700 MTBC reads with a 100% identity threshold. These samples were selected for their lesions and have tested positive for at least IS1081. Looking at the distribution today, so finger crossed something real is in there!

*Thread Reply:* Yes of course no problem! Yeah, I think that’s plenty. My myco database only has around 500 genomes.

*Thread Reply:* Yep, that’s been a good indicator for me. If at least 25% of the myco summed reads can be assigned to the MTBC, then I have been able to get at least a partial genome from the library after capture. So I would say it’s not a way to authenticate MTBC positivity in a sample but a way to predict a positive capture, if that makes sense. I don’t think we’ve a good enough grasp of myco diversity to authenticate MTBC with just a few reads, even if they’re evenly distributed.

*Thread Reply:* Depending on your capture efficiency, you should be able to get away with sequencing at half that depth. I’ve found that I can sequence at a depth of ~2-3 million reads after Daicel Arbor Biosciences myBaits capture (we send them MTBC genomic DNA and they make the baits and send us the kit https://arborbiosci.com/genomics/targeted-sequencing/mybaits/mybaits-expert/mybaits-expert-wge-whole-genome-enrichment/) and expect to sequence the captured library to saturation.

*Thread Reply:* Good luck! You’re working in the Pacific, right? I REALLY hope you find something 🙏:skintone2:

@Adrian Forsythe has joined the channel

Hello, I have a question. If you want to re-sequence a certain library to reach the desired genome coverage (e.g. 10X), where the targeted genome could be human, microbial, etc., which calculation do you use to estimate how many additional reads/Gbp you will need?

Of course the simple formula would be something like this: Reads to generate = [ GENOME SIZE ** (proportion of unique mapping reads from total raw reads) ** Expected read depth ] / (average read size of uniquely mapping reads)

A second option is to Run preseq, and propagate the curve it estimates. This solution is certainly better than the previous one, as the % of duplicate reads is not linear.

Do you have some other method that outperforms preseq for this? If using preseq, do you use any particular tuning ? Do you have a script to share? (other than the outputs we can get from nf-core/eager)

*Thread Reply:* @Felix Key has a nice little script that parses PreSeq output to give you more precise information: https://github.com/keyfm/shh

*Thread Reply:* However looking at the README it might customised for eager1 😕

*Thread Reply:* So you might have to do some reconstruction

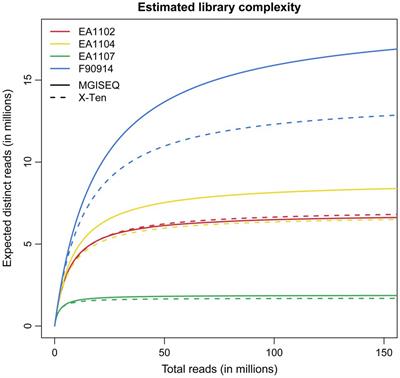

*Thread Reply:* Looks like this:

*Thread Reply:* (CF == cluster factor == duplication ratio)

*Thread Reply:* Still PreSeq though

*Thread Reply:* It looks liek a lot ofnf-core pipelines still use PreSeq actually, so I dunno if there is abetter mehtod

*Thread Reply:* ok, great. Thanks a lot James. I think that building a plot like this will be enough for a first try. We were just discussing today with @Pierre Luisi if in addition to the expected distinct reads curve we could also estimate if the genomic positions covered are evenly distributed along the genome or if there is also some sort of asymptotic curve of the chromosomes/regions/breadth of coverage while increasing the sequencing depth, that does not fully follow the same trend than the preseq curve…

*Thread Reply:* you can also have a look at nonpareil https://github.com/lmrodriguezr/nonpareil

*Thread Reply:* Two caveats about nonpareil:

1) It's more for metagenomics rather than genome coverage for a target genome 2) Only supports uncompressed FASTQ files, which sucks baaaaad

*Thread Reply:* I see. Incredible that there are still tools using uncompressed fastq files out there… I got the impression it was more metagenomics oriented

*Thread Reply:* but it’s good to know it exists

*Thread Reply:* A LOT of metagenomic tools still only accept/produce uncompressed files

*Thread Reply:* It was crazy, Maxime and I went through loads recently. But I think with modern data, you don't need to sequence much...

*Thread Reply:* still, disk space is way too expensive to spare it in something like this

*Thread Reply:* Either the devs have 💰

*Thread Reply:* or just tiny assemblies

Hello everyone :)

I am wondering what the SPAAM community thinks of the different releases of Kraken2. I did my master's project on dental calculus and the first time I run Kraken2 (v. 2.0.8 beta) / Bracken I got almost 10000 taxa identified in the dataset. I recently analyzed (almost) the same dataset again, using Kraken2 v.2.1.1 and only got about 2500 taxa identified. From what I understand, this most recent release of the software works a bit like KrakenUniq in reducing the false positive rate. Do you think this change could explain such a big difference in the number of taxa identified? In your experience, do the most recent versions of Kraken2 indeed perform better? Would there be any acceptable reason to keep using an old version?

*Thread Reply:* To add to that: With the new version of Kraken2, we recover considerably fewer oral taxa from our community (40 vs 120).

*Thread Reply:* Hi everyone! I've been thinking about this question a bit since I'm currently using Kraken2 but have seen how Kraken1+KrakenUniq was a preferred alternative during SPAAM3. I found the github page with the changes in each version released (https://github.com/DerrickWood/kraken2/blob/master/CHANGELOG.md) but I don't understand it well enough so I don't know if these changes are more likely to affect false positive detection. Would it be possible to get advice on this? A friend and I in our group are the only ones doing metagenomics and we're kind of towards the end of our PhD so now is a good time to decide whether it's ok to stay with Kraken2 or if we should just reconfigure our reference database for Kraken1 and KrakenUniq. If Kraken2 now behaves a bit less wildly with false positives that would be a faster solution for us since all that's needed is a software update!

*Thread Reply:* @James Fellows Yates if you get a second could you please do your tagging people who might know on this post? Obvs no rush!

*Thread Reply:* Oooff...

*Thread Reply:* I'm not really sure. Generally I would use the latests tools, but I've really used kraken2...

*Thread Reply:* @Nikolay Oskolkov or @Maxime Borry maybe?

*Thread Reply:* @Nikolay Oskolkov was doing some comparisons between KrakenUniq and Kraken2 if I remember correctly.

*Thread Reply:* But the official word is that Kraken2 (since version 2.1.0) with the --report-minimizer-data flag should produce the same results as KrakenUniq

*Thread Reply:* Thanks Maxime 🙂

*Thread Reply:* Thanks everyone. Any first hand experience comparing the new version of Kraken2 to the older one? I am happy about the reduction of the overall number of taxa detected (as obviously the previous version was recovering lots of spurious hits) but the loss of 2/3 of oral taxa is scary…

*Thread Reply:* Did you use the same database & database version?

*Thread Reply:* Maybe just many more genomes added and pushed hits further the tree?

*Thread Reply:* No, new database, so we are not sure what is causing the effect. Have not tested systematically, as simply not enough time for that

*Thread Reply:* Am hoping someone has the answer 🤪

*Thread Reply:* Hi guys, a lot of things to say here, sorry for being late to the discussion. First, I do not think that newer versions of Kraken2 are more accurate than the older versions of Kraken2 @Katerina Guschanski. Instead, the growth of databases (as @James Fellows Yates asked about) with time usually results in fewer detected taxa (at the same depth of coverage threshold). Second, newer versions of Kraken2 themselves do not reduce false-positive rate @Markella Moraitou if you do not filter your output with respect to the breadth of coverage, which is provided ONLY if you use the --report-minimizer-data flag as @Maxime Borry mentioned, please note it is not a default flag. Third, even with --report-minimizer-data the breadth of coverage delivered by Kraken2 is very different from the one from KrakenUniq (if you just look at the number of unique kmers stats), however, they are correlated. In my case, after I checked ~10 human + non-human samples, the correlation slope was ~0.7 meaning that if one used 1000 unique kmers as a threshold for KrakenUniq, this should corespond to 700 unique kmers threshold for Kraken2 in order to get more or less comparable final lists of detected taxa. Fourth, if you carefully examine Kraken1 / KrakenUniq and Kraken2 papers, they clearly write (in the Kraken2 paper) that the database search they have implemented in Kraken2 (approximate minimizer search instead of exact kmer search in Kraken1 / KrakenUniq) reduces specificity of classification (how much - not clear) but offers instead superior speed and memory advantages. Moreover, the authors also mention the effect of "collision" of minimizers corresponding to different kmers (a minimizer is just a substring of a kmer), which introduces some "randomness" in the taxonomic classification from one database to another. In other words, no two Kraken2 databases are identical (obviously not good for reproducibility), so even if you use the same ref genomes, two databases built at two different time points might result in different classification results. In summary, after extensive testing Kraken2 vs. KrakenUniq I am still not convinced that the speed and memory advantages of Kraken2 can compensate for the reduction in specificity

*Thread Reply:* Brah... That would be a perfect blog post, literally just that... @Shreya @Ele 👀

*Thread Reply:* Wow, so cool and helpful @Nikolay Oskolkov! Thank you so much for the explainer!

*Thread Reply:* Thanks @Nikolay Oskolkov (and everyone else who responded)! I was not aware that the increase of database size could have this effect!

*Thread Reply:* @Maria Zicos think this will be of interest to you. @Nikolay Oskolkov as always you explain things so nicely, thank you! And yes if you fancy a writing a blog post we would love to host your expertise! Let me know and I can send you some details 🙂

*Thread Reply:* @Nikolay Oskolkov comment prompted me to have a look at the Kraken2 paper again: https://genomebiology.biomedcentral.com/articles/10.1186/s13059-019-1891-0 One additional point that I should point in favour of Kraken2: it uses spaced-seeds, meaning that it allows for mistmatches (7 out of 31 by default) in the minimizer, which AFAIK, is not the case for KrakenUniq. In KrakenUniq, no exact kmer match = no detection.

*Thread Reply:* That's really interesting, @Maxime Borry. So this could be one of the reasons for higher number of taxa detected by Kraken2

*Thread Reply:* Could be one of the reason, yes. You can also adjust this parameter to allow the number of mismatches that you want (from 0 to 0.25**minimizer length).

*Thread Reply:* @Maxime Borry for KrakenUniq / Kraken1 I would say "no exact kmer match = no assignment of the kmer", which does not necessarily mean that a read is not assigned since a read can have a number of kmers and they all "vote" for a particular taxon. But generally agree that Kraken2 is more permissive and KrakenUniq / Kraken1 is more conservative.

Hi, all! What is everyone doing to decontaminate skeletal elements before powdering? Does everyone still UV bone samples for 15-30 minutes per side? Is there any concern that doing so does more harm than good?

*Thread Reply:* Uving isn't actually AS bad as some people thingk.

*Thread Reply:* @Christina Warinner often refers to this paper: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0013042

*Thread Reply:* It can be bad if you have wet samples + UV is strong and right next to the sample (IIRC)

*Thread Reply:* We tested UV only vs. EDTA wash only on a small subset of samples and ended up doing both, as both were removing some parts of contaminants but having little effect on endogenous (oral, using DC samples). Not very systematic results of this not very systematic experiment are presented here: https://academic.oup.com/mbe/article/37/10/3003/5848415?login=true

*Thread Reply:* We also forgot to put away our tooth samples from the lab bench once and they ended up under ceiling UV for 2 h, without any advert effects on the host endogenous content. We never published this faux pas (surprise...)

*Thread Reply:* sounds like a good anonymous twitter post 😉

*Thread Reply:* #HonestMethods

*Thread Reply:* Thank you both so much! This is very helpful 🙌:skintone2:

*Thread Reply:* With UV, it matters a LOT how close your sample is to the UV bulb. The strength really drops quickly off with distance. The main advantage of using UV in a room is that it kills all the microbes on the surfaces and so keeps the room low biomass. However, overhead UV does very little to short aDNA fragments, especially if the sample is dry and not close to the UV bulb. I think it can probably mostly be skipped. I think the EDTA wash is more useful

I have a question regarding reference sequences: doing my first steps in microbiome analysis, I naïvely thought that you could go on https://www.ncbi.nlm.nih.gov/genome/, search for your species you are interested, and download the fasta under “Reference genome”. It turns out this was wrong, e.g., for Streptococcus sanguinis, where the reference genome is SK36 but ncbi directs to SK405, which is not even a full genome. So if downloading bigger numbers of different reference sequences, where do people find them without going through the literature?

*Thread Reply:* Mmmm, I would have done the same thing as you did…. You can also check all the assemblies of a species and their sizes in bp and chose one in the median size o something like that…

*Thread Reply:* thanks, in the meantime I also noticed that even for Yersinia pestis the “reference genome” is not CO92 but A1122 (assembly), so I’m wondering if this should be used at all

*Thread Reply:* That’s strange… It was CO92 not long ago… Nevertheless, CO92 was a very bad reference to use anyway… haha

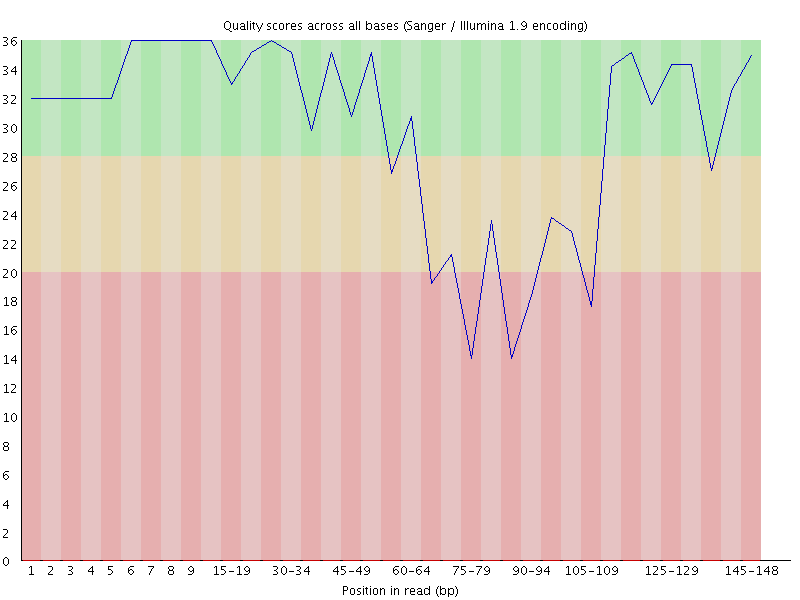

Hi all! I’m sequencing aDNA libraries prepped following a protocol derived from Rohland et al 2015's partial UDG protocol on an Illumina NovaSeq platform. The samples aren’t pooled; each library has its own Illumina adapter. I’m finding that all of my R2 reads contain 20-60% single-G repeat sequences (basically 40 Gs in a row repeated dozens to thousands of times). Has anyone seen this before, and have any idea on how to remedy it for future sequencing attempts? Thanks in advanced!

*Thread Reply:* Yes, poly-G tails are common on Illumina 2-colour chemistry machines (NextSeq/NovaSeq)

*Thread Reply:* https://github.com/OpenGene/fastp

More specifically: https://github.com/OpenGene/fastp#polyg-tail-trimming

*Thread Reply:* fastP has a specific command to clip them off (we use that in nf-core/eager)

*Thread Reply:* More info: https://sequencing.qcfail.com/articles/illumina-2-colour-chemistry-can-overcall-high-confidence-g-bases/

*Thread Reply:* Thank you so much for the info, @James Fellows Yates! Good to know it’s not just my libraries.

*Thread Reply:* Nope, perfectly normal 🙂

*Thread Reply:* Wondering why this would affect predominantly R2 reads. Any idea?

*Thread Reply:* End of the chemistry reagents, more out of sync cluster, meaning the camera can't work out what colour it is, resulting in 'nothing' to be detected

Hello all, I am an archaeologist doing research with aDNA for my dissertation. This is my first experience with this type of research and I am focusing on microbiome analysis from historic calculus samples. I have just received my sequenced data from the lab and I wanted to ask if either 1) someone might be willing to help me get started with analysis, and/or 2) might know of anyone/anywhere that might offer analytical services for this type of research (ideally someone who would work with me collaboratively so I could learn as well)? I am working with a microbiologist at my university, but there is no one on campus with aDNA experience, and my efforts to find external help have not been successful. I've discussed some particulars of my project with some other graduate students I met at SPAAM, but many of the programs are already setup for analysis on their lab network. Using my research questions as a guide, I have loaded all of the necessary programs for analysis to my computer, I'm just not sure where to go from there in getting things setup. I am a beginner at this, but I am eager to learn and I appreciate any guidance that anyone is willing to provide. Thank you!

*Thread Reply:* You could check the summer-school we made this year to get you started: https://github.com/mpi-sHH-SummerSchool/dag-material

*Thread Reply:* and https://youtube.com/channel/UC4ieuUEHUqYQGQF_DKIwcKA

*Thread Reply:* Thank you, I will definitely check out those resources.

I feel like there are lots of Krakenuniq questions, do we need a whole channel just for it? I’m interested in building as comprehensive a database as possible. The krakenuniq default “nt” option is only the microbes in nt— should I be manually downloading the entire “nt” from the blast server, running dustmasker on it, and kraken-building it from there?

*Thread Reply:* Nah 9 domt think it's necessary, would be overkill. What would be good is a blog post or community contribution to the krakenuniq docs

*Thread Reply:* @Shreya and @James Fellows Yates, yes, this is exactly what I do. I download the whole blast nt database and build a KrakenUniq database out of it. This however would only be beneficial (compared to the microbial NT from KrakenUniq) if you want to also screen for eukaryotes in addition to microbes. However, with sediment aDNA becoming very common (next will be catching aDNA from the air of Denosova cave via filters 🙂 ), adding eukaryotes to a database seems unavoidable to me. The problem here however is the very poor quality (or unequal quality) of eukaryotic (more specifically, mammalian) reference genomes in the blast nt database, this might lead to such mammals as horse, wolf, mouse and pig always present if you classify or align reads from another mammal (which is a pure artifact of unequal quality of mammalian ref genomes in the blast nt database). However, the poor quality of mammalian ref genomes in the blast nt database makes it technically possible to run classification or alignment (since the whole blast nt is only ~300 GB so not so much RAM is needed). If one needs to use good quality ref genomes from ~300 mammals, that would be close to impossible to use for alignment or classification (in terms of memory resources, because it would require a few TB of RAM)

*Thread Reply:* Well, I am back with more questions to annoy you with, apologies and thank you in advance @Nikolay Oskolkov! I do indeed want to include eukaryotes--humans in particular and at least being able to detect a potential mammal! I have downloaded nt.fna.gz and run dustmasker on it and now realizing I need to make the seqid to taxid mapping file in order to build the database. If I download nuclgb.accession2taxid and nuclwgs.accession2taxid, concatenate them, and pull the accession and taxid columns, would that work? Seems like the krakenuniq-download command would handle all of this for me but leave out the eukaryotes…

*Thread Reply:* Oh dear and I have to add a third column if i want to use the --taxids-for-sequences and --taxids-for-genomes! 🤯

*Thread Reply:* @Shreya yes, you need nuclgb.accession2taxid and nuclwgs.accession2taxid files but also names.dmp and nodes.dmp files, if I remember correctly. You know what, the simplest would be if you use

kraken2-build --download-library nt --db FULL_NT

this will download the full NT (including eukaryotes, invertebrates etc.) with all the mapping files needed. Despite you use Kraken2 for this purpose, you will build the KrakenUniq database using something like this:

krakenuniq-build --db FULL_NT --kmer-len 31 --threads 80 --taxids-for-genomes --taxids-for-sequences

@Abby Gancz you might also find this thread useful because you are also building a KrakenUniq database

*Thread Reply:* 😮 I hadn’t even thought of using kraken2 to download!! Brilliant!! Thank you so much Nikolay!!

Hi Friends! I have a question about library method choice for coprolite metagenomics, which I feel is applicable to general experimental design. My background/setup: I am doing dietary/paleoenvironmental metagenomics on ancient sloth coprolites for my PhD. I started the work with 7 samples, experimenting with extraction methods, and doing double-stranded libraries, which I screened. I determined the best extraction method from those (PowerSoil kit > Plant mini kit or Dabney adapted for tissue, which is the standard my lab uses for non-bone). My conundrum: I have now got five more coprolites, which I extracted only with the PowerSoil kit (because there's not too much point with the other methods). My group is looking into changing from Meyer-Kircher libs, potentially towards single-stranded protocols, likely SCR. I'm wondering whether I should still do these five libraries with Meyer-Kircher to keep consistency with the rest of the study, or whether it's better to just go with whatever is likely to yield more data. I feel the latter is a more responsible a way to use up a paleontological sample (always maximise outputs), but I'm worried that maybe using a different library protocol, especially moving from double-stranded to single-stranded, will impact the DNA community in the sample quite a bit and make these new samples not comparable with the previous ones... My questions to you all: Are some of you working with single-stranded libraries on metagenomics? How has that impacted your results? Does recovering ssDNA do cool things for detecting more taxa? Have you had trouble with mixed datasets of double and single-stranded libraries? What would you advise me to do? Do you think my choice could impact "publishability" of the work? In an ideal world, I think my instinct would be to try these new libs with the ss protocol, and if it improves yields etc. as expected, re-do the previous 7 samples with this new protocol too, and re-screen them. Only this chapter is currently not a spending priority (I'm doing a lot of popgen stuff too) so I don't know if I can afford re-building and re-sequencing the old 7 samples.

Hi @Maria Zicos

It's a very good question. No one has done a systematic study for ancient Metagenomics (low hanging fruit for a good publication I think ;))

For calculus I did a tiny bit of experimentation and it didn't make much difference when doing microbial genome construction (both very old and more recent).

It depends on your question ultimately I think. Do you want to do Metagenomic de novo assembly? In this case having DS libraries are probably better as you get more longer (still ancient!) reads, whereas SS will increase the proportion of very short but unusable reads for assembly.

However if you're looking for dietary DNA or host DNA, it would make more sense maybe to Indeed switch to SS as you will pick up a greater proportion of true endogenous DNA, which you can use for reference based mapping approaches.

I don't know of any study that estimates the amount of increase you may get for SS. I would maybe refer you to my normal poop question person @Alex Hübner, but he often is dealing with samples with unusual preservation. I don't know if he has SS libraries

Hi @Maria Zicos, That’s indeed a very interesting question and I guess no one has answered yet. I agree with James’ classification of the use of DS and SS libraries. If you are mainly interested in doing reference-based analysis, you should try SS libraries, however, they won’t be off much help for assemblies. Many of the palaeofaeces samples, we have processed in our lab that relatively good preservation but also were excavated at archaeological sites that favour preservation (low-water exposure environments) and we always only did DS libraries. Regarding the design of your experiment, in case you figure out that SS libraries are far superior over DS libraries, you should go back to re-process the old coprolites, too, if possible. You have a relatively low number of samples in your study and consistency in the preparation would increase the power for statistical analyses.

Is there any reason you could not perform Kircher et al. 2012 style dual-indexing with indexing primers of different lengths? For example, could I use a forward indexing primer with an 8 nucleotide index and a reverse indexing primer with a 7 nucleotide index?

*Thread Reply:* I don't think there would be an issue with building it (although I also might be wrong there, idk), but I think it might make data processing more difficult? I could be wrong, but I would imagine you would need to only use the first 7 nucleotides of the 8-nt index when demultiplexing (which could be an issue if that last base is the only difference between two indices) and then you'd have to trim an additional base

*Thread Reply:* This is what I am not sure about, because I have no experience with demultiplexing. I thought maybe as long as you specify the index seqs in the sample sheet it wouldn’t matter if they were different lengths.

Does anyone know how the spreadsheets that come out of the HOPS pipeline are created? We just noticed that the species listed in these spreadsheets aren't always the same when comparing different runs with different individuals, and read numbers and species listed in these spreadsheets are also not always the same as the read numbers and species present in the rma6 file for the same library (which I'm guessing has to do with that not being filtered through yet?). At first I thought it was maybe just whatever species had hits were listed in the spreadsheet, but a lot of the time there are 0 reads listed for some of these species, so I'm not sure. Is it that there are some species hard coded into the spreadsheet and others that just make it into the spreadsheet when there are hits in the rma6 file? Any insight on how the program decides which species and reads make it into these output spreadsheets is appreciated, thanks!

Answer from @aidanva and I (she's sitting next to me)

1) rma6 contains everything - consider this raw 2) hops will only report hits of stuff your taxon list that you specify. 3) However it also looks below that node (I think two levels) and report those as well (depends on the discuss). With the MALT LCA it may not push reads higher up the tree if it's unique at that node 4) Megan may not agree with hops because hops does additional filtering by default such as destacking and deduplication that will reduce the numbers of reads

Also Aida says in a given HOPS table, it will show all taxa that has been found in the run across all samples (even if that particular sample doesn't have his to it)

Someone remembers the explanation of why “porcine type-c oncovirus” is a typical taxonomic hit in metagenomics?

*Thread Reply:* If it’s a guessing game, I would place my bets on the endogenous retrovirus explanation (apparently it’s a virus that can be integrated in mammalian genomes), and/or a genome contamination (since Sus scrofa appears quite a few times in Conterminator)

*Thread Reply:* My response too! I also wonder if it could be a model organism or something...

*Thread Reply:* But more likely the retro virus

*Thread Reply:* it is just that I have a group of saliva samples, all taken and processed in the same way, but I only get the hit in some of them… so that’s the tricky part… Once would expect to find them in all or most of them… But well, of course there are many technical biases that could explain this as well…

Hello everyoneI'm writing to you because I'm doing my master thesis on a permafrost analysis. I am working on samples of a very old permafrost soil from Greenland. I have extracted some DNA from it. However, I had a very small biomass and I don't think I have much DNA. After extracting the DNA I did PCRs on the 16s of the bacteria to check if there was any in my sample and I had bacterial DNA in my samples. After that, I wanted to know the DNA concentration of my extractions.

And I could get these results:

> Sample Concentration (ng/µL) A260 260/280 260/230 > 226 4.8227 0.096 2.119 0.088 > 227 6.063 0.1213 2.073 0.044

All the numbers correspond to what we expect (very low absorbance at 260nm, but i think it is normal the biomass is very low and even more the DNA) But the 260/230 ratio is very strange, it should be around 2, and here it is really tiny. Have you ever had this kind of case with ancient DNA? What is also possible is the presence of many soil phenols in my sample. But could the presence of degraded ancient DNA have any influence on this ratio?

Thank you in advance for your answers!

*Thread Reply:* **My project consists in sequencing the metagenome of the organisms still living in this soil.

*Thread Reply:* Hi @Louis Lhote that's somewhat to say. A first caveat is that the vast majority of people here so shotgun sequencing not (16S) PCR

*Thread Reply:* For the ratios I know @Zandra Fagernäs @ivelsko might have some knowledge as they've talked about it in our group meetings in the past.

More specifically for sediment in general: @Pete Heintzman @Vilma Pérez @Barbara @Anan Ibrahim @Linda Armbrecht might have some experience

*Thread Reply:* Hi Louis, the low 260/230 suggests one of 2 kinds of contamination: guanidium salts, or protein

*Thread Reply:* Both can be cleaned up some by washing, but with such low DNA concentrations you risk losing most of it

*Thread Reply:* Hey, I plan on doing some shotgun sequencing afterwards. But the PCR was only to verify the presence of live bacterial DNA (the pcr gave fragments of about 500 bp which excludes ancient degraded dna) I want to be sure of the quality of my DNA before sequencing it Thanks!

*Thread Reply:* That's really low for post-PCR values though. How did you clean up the PCR product before you took the 260/280/230 readings? And what did you take the readings with? NanoDrop? Qubit?

*Thread Reply:* Ok thanks! @ivelsko do you think it is still possible to do a sequencing with this kind of contamination ? This is only the raw result after extraction of the dna from the soil. The PCR was only used to ensure the presence of large dna in my sample. I calculated this value with a nanodrop

*Thread Reply:* Ah, ok. With values this low, the NanoDrop isn't very accurate and it's better to take readings with a Qubit. Do you have access to one? If so, re-run your extracts through that and you'll have much more accurate values

*Thread Reply:* If you can't use a Qubit, I think you can still proceed with library building with this little DNA

*Thread Reply:* The subsequent clean-up steps and amplification should be enough to remove the contamination and bring up the DNA concentration

*Thread Reply:* @ivelsko Thank you for this information! I normally have access to a Qbit. I will try to see what I can get as a value with it. What is the risk of doing a sequencing without cleaning my sample? Can it create bias?

*Thread Reply:* The main issue will probably be poor library construction, so your library might be biased and then your sequencing results will be too. It sort of depends on the method you use for library construction, some will likely be more affected than others

*Thread Reply:* Since you're looking at the aDNA you'll probably be using the Meyer&Kircher protocol? I think that will be less affected than say the Nextera kits that use an enzyme to shear the DNA

*Thread Reply:* @ivelsko I'm actually going to do a Pacbio metagenome sequencing (I've never done one so I'll check with my lab what they use). The goal is to have the dna of organisms still living in the soil.

*Thread Reply:* I've never done any PacBio sequencing, so I'm not familiar with what they want for input quality. It's definitely good to ask the sequencing center what they expect of sample quality for high-quality sequencing data

*Thread Reply:* @ivelsko ok thanks! I'll ask

*Thread Reply:* Hi @Louis Lhote What type of material is your sediment? i had a similar problem with sediment samples from the deep layers (~20m). They had a particularly high level of clay content which i presume always affected the ratio readings of my nanodrop.

*Thread Reply:* Hi @Anan Ibrahim it is permafrost core so a very dry and cold soil. Thanks for your response! I think it is the same problem

Hi! in the lab we advanced quite a lot in processing our aDNA data with nf-core/eager. It is going quite well, but we see some bias and some metrics (with fastQC after clipping and damaeprfiler) that disturb us. We are now in the great moment when you need to undestand the effect of parameters at the different steps. I have a couple of specific question about parameters for Adapter removal and damaprofiler. Better to ask here or in nf-core/eager slack channel? Thanks

*Thread Reply:* If it's specifically questions about Adapter removal and damage profile, this is not eager specific so here should be fine

*Thread Reply:* Great! so, as a starter...

- in nf-core/eager the minimum adapter overlap required for clipping is set to 1 by default, when usually it is preferred the Adapter Removal default value (0). Why did you choose such a default parameter value??

- Also the Specify minimum base quality required for clipping is by default 20. Some people prefer to lower down that threshold down to even 2, so they keep potentially informative reads here and non informative will be removed when mapping because of the mapping quality. What are you thoughts on that? Thanks 🙂

*Thread Reply:* Uhh, can you clarify number 2 - do you mean quality clipping?

*Thread Reply:* You say adapter overlap twice

*Thread Reply:* But 1) is a left over from eager 1. There was in house testing that suggested 1 worked best. I personally don't think it's a very good idea but no one has complained so far. It's more aggressive but cleans up reads more, I guess.

*Thread Reply:* For quality trimming 20 refers to base quality score. I've never heard people going down to 2, that doesn't make sense to me because that means you would keep extremely low confidence base calls in your reads. I've only heard people go higher than 20...

*Thread Reply:* You were right for 2... base quality 😬

*Thread Reply:* for 2). I totally agree with you, and I will stick to this. but here is a quote from a Science paper (as usually digging into the Supplementary Material to get those details)... "AdapterRemoval v1.5.3 (60) was used to trim Illumina adapter sequences, leading Ns (-- trimns) and trailing quality 2 runs (--trimqualities --minquality 2) from both single- and paired-end reads."

*Thread Reply:* Thanks James, so I will definitely increase the min adapter overlap and I'll stick to the min base quality of 20🙌

*Thread Reply:* Which paper is that? That looked like it could be a typo

*Thread Reply:* Quality 2 runs sounds weird too

*Thread Reply:* But yeah no harm in increasing the number of overlap at all.

*Thread Reply:* Do you want some names? haha doi:10.1126/science.aav2621

Yes I was wondering too if it could be a typo. But Nico then told me it could be to follow the strategy I mentioned before of not be so stringent at that step and remove later when mapping

*Thread Reply:* yes, as far as I remember some people in CPH where using --minquality 2 for AdapterRemoval, I guess maximize number of reads that get mapped, despite the quality score… But I agree on keeping quality filter at 20 for our analyses

*Thread Reply:* I guess it depends how much coverage you have.

If you have low coverage it massively risks getting false SNP calls. If you have higher coverage is not as bad, maybe it's worth the trade off to get ultimately higher confidence

*Thread Reply:* I agree… It would depend on the case and how many supporting reads there are by position.

*Thread Reply:* But 2 is very low, it's a 63% base miscalling probability.

*Thread Reply:* I know, but if you check, several papers from CPH have used this parameter

*Thread Reply:* @Åshild (Ash) do you happen to have any insight on that (I know you don't really work in those groups, but maybe you've heard/been in discussions?)

*Thread Reply:* I think it is used because of something related to the error probability of base calling with the older Illumina HiSeqs, and that that was a parameter used by Illumina. But I’m not entirely sure.

Have any UK-based people had a hard time getting KAPA HiFi HotStart ready mix? Do any UK-based suppliers sell it?

*Thread Reply:* Is there any lab-based (or otherwise) product that UK is not short on currently?

*Thread Reply:* lol fair. but I can’t even find a provider that’s “out of stock”

*Thread Reply:* If anyone ever comes across this post with the same question, I could not find a way to order it without directly contacting Roche and getting a quote and placing a sales order. Farzad is the man and can help you meet all of your KAPA needs: farzad.javad@roche.com

Hello experts! Would it theoretically be possible to identify the geographic origin of plant remains recovered from dental calculus (let's say Medieval/post-medieval) from their DNA? And what would the practical challenges be? (I'm assuming fragmented DNA and the overwhelming oral signal would cause issues?)

*Thread Reply:* Theoretically yes.

You would need sufficient DNA reads/coverage on loci on the plant genome that you can do populatio ngenetics - assuming the given species has suffcient structure diverstiy that it can be linked with location

*Thread Reply:* Primary issue would indeed be overwhelming oral signal meaning it's difficult to retrieve the plant reads

*Thread Reply:* Theoretically is all I need for a grant application 😛 well, some grants...

*Thread Reply:* I mean theoretically yes, but a lot of plants have enormous genomes with lots of repetitive elements, so without a huge amount of plant reads/a very successful capture, it will be... Challenging. To say the least.

*Thread Reply:* Yeeeeeaa thought so... Any insight on protein-markers that could be useful @Zandra Fagernäs?

*Thread Reply:* Wouldn't you need a "genetic atlas" of plants to project your sample onto? I mean, in human genetics you can predict ancestry of an individual e.g. based on a PCA on genotypes from thousands of other human individuals with known ancestry

*Thread Reply:* That's what I mean 'on loci on the plant genome that you can do pop-gen'

Hi, all. Does anyone know why the Meyer and Kircher 2010 blunt end direct ligation protocol is used preferentially with aDNA? Does anyone do blunt end lib preps with a-tailing and Y-shaped adapters with aDNA? Is blunt end direct ligation more efficient?

I think the Orlando group may have experimented with this a bit: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0078575 (I don't think that directly addresses your question though)

Yes, many groups had serious problems with aDNA and TA ligation. Blunt end ligation was found to work better empirically

*Thread Reply:* Do you know of any other papers that describe that?

*Thread Reply:* Or is the Orlando one the main one?

*Thread Reply:* Thats the published one

*Thread Reply:* Has somebody tried the Santa Cruz Reaction Protocol?

*Thread Reply:* @Katerina Guschanski maybe ask on #general?

*Thread Reply:* Thanks so much, @James Fellows Yates and @Christina Warinner! This is good to know. I imagined there must be a reason why blunt end ligation is used almost exclusively in aDNA despite AT ligation being the mainstream method in genomics.

*Thread Reply:* @Katerina Guschanski Yes, we (Tom Gilbert’s group) switched to using SCR for all aDNA work in 2020, it works really well in my experience 🙂

*Thread Reply:* Brilliant! Any chance you have a protocol you'd be open to share? We'd like to test it in comparison to our standard double-barcode double-index protocol

*Thread Reply:* There is a full protocol supplied by the authors in their supplementary material https://oup.silverchair-cdn.com/oup/backfile/Contentpublic/Journal/jhered/112/3/10.1093jheredesab012/1/esab012supplsupplementarymaterial.pdf?Expires=1649091871&Signature=QTyxLLQOb1QgZ-YTCdBng8xd9rC3rZul8qX7-dUD18KIFRN~GjkQyemPD0mRfv11fcwJQTjfxl9su8u8XvdC-xUKDf5C5fv8X5xi~D7tY0pW04NZNcZh5dhrEmo46Oc0Oc3nMQ0hvm0VqvfGAq9qmPkHccrQKtgzdtBPghfMBHcfsWYToYRAOq2OilKaQ8dCUJ0gm9ewLL9wh4vrrperSEKxdTaCe~amI-K-Vo0fD0HFN8EN32K7ecx1qD8M~ngq68dm73tAI9rt6ZzMPZzZ6nNeJC-xDI5o~ZbtlJ2DGPIyEjSoOhwNkIeGjBnb0gpWls-rtdg6NdyCNHg77r-X4A&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA|https://oup.silverchair-cdn.com/oup/backfile/Content_public/Journal/jhered/112/3/10.1093[…]ls-rtdg6NdyCNHg77r-X4A&Key-Pair-Id=APKAIE5G5CRDK6RD3PGA which is the same as the one we use 😉

*Thread Reply:* That's rare that a protocol from the paper can be taken and applied without tweaks 🤪

*Thread Reply:* Thanks a lot, @Åshild (Ash)

*Thread Reply:* The only major difference is that we do a SPRI bead clean-up after the library build instead of MinElute cleanup.

*Thread Reply:* Any specific reason why?

*Thread Reply:* it’s faster and less pipetting

*Thread Reply:* Hahaha, true that!

*Thread Reply:* The protocol they provide in the paper is really good and explains the steps well , it’s true that many of the other protocol papers out there are often lacking in detailed explanations.

*Thread Reply:* That's really comforting to know

*Thread Reply:* We had issues with the Santa Cruz protocol. We got a lot of primer dimmers. @Maria Lopopolo or @Miren Iraeta Orbegozo (@Iraeta Miren) can give more details about the issues we had, as it was them that did it. @Betsy Nelson on the other hand has just spent some time in California learning the protocol and may be able to give some details on the tricks to make it work. But for us, our first shot was not very good.

*Thread Reply:* @Nico Rascovan did you QC the adapter and splint oligos before using them?

*Thread Reply:* we QC’ d the splits and adapters. I think this library prep success it’s really dependant upon the type of sample and how you can fine tune beads clean-up and the c1-c5 dilutions e.g. more stringent beads to DNA ration post adapter libration or adding less adapters (e.g. one step below suggestion) at ligation step. I think Miren is now testing a few of these adjustments and was seeing less dimers 🙂

@Miren Iraeta Orbegozo has joined the channel

Hi folks — I’m wondering if anyone else has been having trouble (or has solved the issue of) downloading ENA files which give FTP directory errors recently? Trying both ENA’s enaDataGet scripts and wget directly (and across different projects), I sometimes get Error with FTP transfer: <urlopen error ftp error: error_perm('550 Failed to change directory.')>. Googling suggests that this often happens when the directory doesn’t exist or perhaps in the case of permissions issues, which is not something I could resolve on my own ofc 😕

*Thread Reply:* Hey Olivia, can you post a few example commands to see if we can replicate this?

*Thread Reply:* The ENA servers are sometimes a bit shaky, so it could just be a connection issue - particularly if you're trying to download from the US.

*Thread Reply:* You could consider trying to download stuff with pipelines such as nf-core/fetchNGS which does some retrying mechanisms for you.

Or even try: https://github.com/ncbi/sra-tools/wiki/HowTo:-fasterq-dump

which should be able to download ENA data but from the SRA mirror of the ENA (as SRA/ENA basically mirror each other and has the same data)

*Thread Reply:* Ooh thank you for the tool recommendations, those are good to have and I’ll check them out! The good news is that I had a labmate test it and I also tried it locally, both of which succeeded, so ENA isn’t the issue. The bad news is that my profile on our advanced computing cluster is still being rejected! But that’s a problem for our computing team, so I’ll leave it at that. /endthread

Hey all, wondering if anyone has got experience working with sourcepredict, and might be able to help me? Just keep getting an error and I don't know what I'm doing wrong.

It seems like step 1 runs smoothly and it tell me something like:

- Sample: 7309-02minikrakenAll_report known:98.61% unknown:1.39%

But then it tries to run step 2, and it keeps saying: Traceback (most recent call last): File "/home/vanme090/.local/bin/sourcepredict", line 8, in <module> sys.exit(main()) File "/home/vanme090/.local/lib/python3.8/site-packages/sourcepredict/main.py", line 172, in main sm.computedistance(distancemethod=distancemethod, rank=RANK) File "/home/vanme090/.local/lib/python3.8/site-packages/sourcepredict/sourcepredictlib/ml.py", line 260, in computedistance tree = ncbi.gettopology( File "/home/vanme090/.local/lib/python3.8/site-packages/ete3/ncbitaxonomy/ncbiquery.py", line 463, in get_topology root = elem2node[1] KeyError: 1

I've tried different Python packages, but they all give me the same error. To get the taxid sample files, I run kraken with (tried both the standard and the minikrakenv28GB databases). It looks like ete3 can't get the ncbi taxonomy, and therefore it also crashes further down the line? Anyone got any ideas what I'm missing/doing wrong?

Hello all, I am looking to test for positive selection using the branch-site model in codeml (PAML), but I am a bit confused with some parameters in the control file (I've understood the main ones including NSsites and model but I am unsure about some of the others). I was wondering if anyone had experience with this, and if they would be happy to have a quick chat or message exchange to confirm my understanding? Thank you!

*Thread Reply:* ooofff, not heard of that tool unfortunately...

@aidanva @Arthur Kocher @Meriam Guellil? I guess you guys work with phylogenies relatively regularly... any ideas?

*Thread Reply:* Sorry have never used that tool either 😶

*Thread Reply:* Thank you all for you replies, and no worries 🙂 I will go by trial and error and see where it leads 😊

*Thread Reply:* Hi Ophelie! I think that Antony had worked with PAML before

Hi all! has anyone here worked with Metaphlan3? I just analysed a sample but got no classification at all: ```#SampleID Metaphlan_Analysis

cladename NCBItaxid relativeabundance additional_species

UNKNOWN -1 100.0``` but I got classification results with Metaphlan2, has anyone had this same issue?

*Thread Reply:* I know @Alex Hübner

*Thread Reply:* Yes, I had some sediment samples that still had something like five species for MP2 but no species in MP3. They completely changed the marker-gene database and there are some species that were dropped during this process.

*Thread Reply:* oh really…. that doesn’t sound good at all… It’s weird though that in my case M2 reported some kind of common fungi but M3 didn’t detect anything… let me show you a snippet:

#SampleID Metaphlan2_Analysis

k__Eukaryota 78.741

k__Bacteria 21.259

k__Eukaryota|p__Ascomycota 78.5878

k__Bacteria|p__Proteobacteria 19.33983

k__Bacteria|p__Actinobacteria 1.91918

k__Eukaryota|p__Apicomplexa 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes 67.16866

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria 19.33983

k__Eukaryota|p__Ascomycota|c__Schizosaccharomycetes 7.90379

k__Eukaryota|p__Ascomycota|c__Sordariomycetes 3.51535

k__Bacteria|p__Actinobacteria|c__Actinobacteria 1.91918

k__Eukaryota|p__Apicomplexa|c__Coccidia 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales 66.18799

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria|o__Rhizobiales 19.33983

k__Eukaryota|p__Ascomycota|c__Schizosaccharomycetes|o__Schizosaccharomycetales 7.90379

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Hypocreales 2.03864

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales 1.91918

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Sordariales 1.4767

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales 0.98067

k__Eukaryota|p__Apicomplexa|c__Coccidia|o__Eucoccidiorida 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales|f__Aspergillaceae 66.10824

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria|o__Rhizobiales|f__Bradyrhizobiaceae 19.33983

k__Eukaryota|p__Ascomycota|c__Schizosaccharomycetes|o__Schizosaccharomycetales|f__Schizosaccharomycetaceae 7.90379

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Hypocreales|f__Nectriaceae 2.03864

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Sordariales|f__Chaetomiaceae 1.4767

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Pseudonocardiaceae 1.25179

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales|f__Ajellomycetaceae 0.74471

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Propionibacteriaceae 0.66739

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales|f__Onygenales_noname 0.23595

k__Eukaryota|p__Apicomplexa|c__Coccidia|o__Eucoccidiorida|f__Eimeriidae 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales|f__Trichocomaceae 0.07975

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales|f__Aspergillaceae|g__Aspergillaceae_unclassified 66.10824

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria|o__Rhizobiales|f__Bradyrhizobiaceae|g__Bradyrhizobium 19.33983

k__Eukaryota|p__Ascomycota|c__Schizosaccharomycetes|o__Schizosaccharomycetales|f__Schizosaccharomycetaceae|g__Schizosaccharomyces 7.90379

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Hypocreales|f__Nectriaceae|g__Fusarium 2.03864

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Sordariales|f__Chaetomiaceae|g__Chaetomiaceae_unclassified 1.4767

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Pseudonocardiaceae|g__Saccharopolyspora 1.25179

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales|f__Ajellomycetaceae|g__Ajellomyces 0.74471

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Propionibacteriaceae|g__Propionibacterium 0.66739

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales|f__Onygenales_noname|g__Onygenales_noname_unclassified 0.23595

k__Eukaryota|p__Apicomplexa|c__Coccidia|o__Eucoccidiorida|f__Eimeriidae|g__Eimeria 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales|f__Trichocomaceae|g__Talaromyces 0.07975

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria|o__Rhizobiales|f__Bradyrhizobiaceae|g__Bradyrhizobium|s__Bradyrhizobium_sp_DFCI_1 19.33983

k__Eukaryota|p__Ascomycota|c__Schizosaccharomycetes|o__Schizosaccharomycetales|f__Schizosaccharomycetaceae|g__Schizosaccharomyces|s__Schizosaccharomyces_unclassified 7.90379

k__Eukaryota|p__Ascomycota|c__Sordariomycetes|o__Hypocreales|f__Nectriaceae|g__Fusarium|s__Fusarium_unclassified 2.03864

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Pseudonocardiaceae|g__Saccharopolyspora|s__Saccharopolyspora_unclassified 1.25179

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Onygenales|f__Ajellomycetaceae|g__Ajellomyces|s__Ajellomyces_unclassified 0.74471

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Propionibacteriaceae|g__Propionibacterium|s__Propionibacterium_acnes 0.66739

k__Eukaryota|p__Apicomplexa|c__Coccidia|o__Eucoccidiorida|f__Eimeriidae|g__Eimeria|s__Eimeria_tenella 0.1532

k__Eukaryota|p__Ascomycota|c__Eurotiomycetes|o__Eurotiales|f__Trichocomaceae|g__Talaromyces|s__Talaromyces_unclassified 0.07975

k__Bacteria|p__Proteobacteria|c__Alphaproteobacteria|o__Rhizobiales|f__Bradyrhizobiaceae|g__Bradyrhizobium|s__Bradyrhizobium_sp_DFCI_1|t__GCF_000465325 19.33983

k__Bacteria|p__Actinobacteria|c__Actinobacteria|o__Actinomycetales|f__Propionibacteriaceae|g__Propionibacterium|s__Propionibacterium_acnes|t__Propionibacterium_acnes_unclassified 0.66739

k__Eukaryota|p__Apicomplexa|c__Coccidia|o__Eucoccidiorida|f__Eimeriidae|g__Eimeria|s__Eimeria_tenella|t__GCA_000002835 0.1532

*Thread Reply:* I am not familiar about the changes with respect to the eukaryotes because I don’t use MetaPhlAn for this purpose. However, you have a lot of unclassified assignments and I also saw that I more or less lost all species in MP3 that were named unclassified in MP2.

In the end, it’s up to you to decide what you want to gain from this analysis. MP3 has a higher number of species-specific marker genes, but this might lead to be less sensitive of picking up traces of species. Kraken2 might be a good alternative for this.

There has been recently a new preprint that evaluates the databases for both programs. I haven’t read it myself yet, but it might give some inspiration: https://twitter.com/RobynJWright/status/1519826469610041344?t=e14DGzJS87jjE6EWY7fpOQ&s=09

*Thread Reply:* awesome, thanks for sharing @Alex Hübner 🙂

Can anyone recommend shotgun metagenomes for lab air and/or lab surfaces (not necessarily an ultra-clean lab) that I can use to run through sourcetracker?

*Thread Reply:* Hi Bjorn, we used Salter et al. 2014 for this purpose (Accession numbers ERR584320 ERR584333 ERR584341 ERR584348)

Hi everyone ! I will perform a metagenomic analysis on several individuals already sequenced for demographic studies in my lab. What threshold should I use to create the sample list? We thought about only keeping the samples that were 1% endogenous human because this is the threshold we use to decide to sequence more or not in general. I used a threshold of 1X depth human for former projects and we realised that that threshold was too high but I fear that 1% endogenous human might be too low to be able to detect the actual presence of, say, a pathogen.

*Thread Reply:* What type of metagenomic analysis do you want to do? For pathogen detection, generally speaking I don't orient myself on human DNA content. You can have horrible human genome coverage but still get a good pathogen hit. Depends on the sample. But maybe others have used similar thresholds before?

*Thread Reply:* Hey Meriam! I think that for the moment I will focus on pathogens because I don’t really know how to analyse a microbiota yet

*Thread Reply:* Does it mean that when you look for pathogens, you analyse even the individuals that were just screened ? May I ask, what threshold do you use then to know when to capture or sequence more of the pathogen you believe is in there ? Or what would be a good pathogen hit ? (Sorry for the basic questions)

*Thread Reply:* If you just want to look for pathogen I would just have a look at all the samples you have frankly. As for the likelihood of detection it depends on the sequencing depth and the organism

*Thread Reply:* So far we have used KrakenUniq as a first step in our pipeline with a threshold of 200 taxReads and 1000 kmers, so I fear that the pathogens in the individuals that were just screened would fall under this threshold because of the low depth

*Thread Reply:* So with KU you actually get a bunch of stats which can inform you on the hit quality. Imposing a set threshold for reads and kmers like this could result in missing lower hits such as viruses.

*Thread Reply:* in the original KU paper they use the kmer/reads ratio and I also normalise by coverage

*Thread Reply:* Kmer/reads ratio sounds good because it accounts for the fact that you don’t have many reads sometimes. And then you normalise by coverage. Sounds good, thank you very much ! I will try to do that 😊

*Thread Reply:* you can check my haemophilus paper from this year if you want to do that

*Thread Reply:* Like @Meriam Guellil said:

*Thread Reply:*

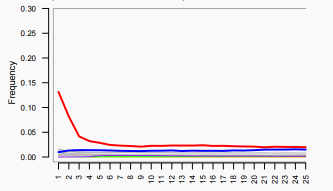

*Thread Reply:* @Meriam Guellil I could see from your presentation at SPAAM last year and your paper that you used E-value = (kmers / reads) ** cov = 0.001 threshold for filtering your KrakenUniq output. Could you please elaborate on why this kind of combination of KU stats and why this E-value threshold?

*Thread Reply:* Hi @Nikolay Oskolkov ! So the kmer/reads ratio is from the original paper of KU and you can expect the reason why it is used is that for a good hit you should have n times more unque kmers than reads. So for example if what you have is mostly overtiling it should technically be represented here. I started testing around in late 2019 (i think...) because I was switching from K2 to KU and I wasn't too happy in using reads or kmers for colouring heatmaps for example because depending on the genome size/structure etc these values or cutoffs for them could be widely different. From the tests I did back then this combination of stats ended up working best for me. Now keep in mind I use it almost exclusively for pathogen detection. So this gives me a nice cut-off value from when hits might be worth investigating even if they are low coverage and also eliminates noise in my heatmaps. The thresholds I have used are variable. 0.001 is usually as low as I go, mainly in order to still catch very low viral hits. There might be better combinations out there though, this is just what I ended up using and for my purposes it has done a great job.

*Thread Reply:* I see, thank you @Meriam Guellil! I can understand the intuition behind constructing a kmers / reads variable for filtering because "kmers" and "reads" are pretty correlated so perhaps one of them can be treated as a redundant, so one could replace the two by a new one which is their ratio. However, I do not fully understand why to multiply this ratio by "cov"? Why multiply and not sum or divide? Also, why "cov" and not "dup"? I just want to understand the intuition behind this combination of KU stats that you used

*Thread Reply:* Like I said I did try a couple of variant including dup but dup is already taken into account with the unique kmer count. The reason i use cov is because while the ration kmer / reads gives you a good indication it doesn't account for how much of the kmer dictionary for the taxon is actually covered, since the kmer numbers can differ and that way I can visualize and filter them irrespective of size

*Thread Reply:* And like I said this is what I came up with but I am sure there a multiple and maybe better ways to pair the stats

*Thread Reply:* Are you using the total kmer count for each taxon, or the unique kmer count for each taxon @Meriam Guellil?

*Thread Reply:* unique kmer count. With total kmer count do you mean for the lower ranks as well?

*Thread Reply:* kmer (including duplicated ones) assigned directly to a taxon

*Thread Reply:* KU lists number of distinct or unique kmers

*Thread Reply:* So unique kmers follow read counts (at least according to KU paper fig 3. https://genomebiology.biomedcentral.com/articles/10.1186/s13059-018-1568-0/figures/3) So shouldn’t K/R be more or less a constant ?

*Thread Reply:* @Maxime Borry this is what I mean when I say that those two are correlated, so if you know one you will find the other one, so no need (perhaps) to filter with respect to both of them, one of them (or their combination such as ratio) is good enough for filtering

*Thread Reply:* But that made me check the definition of “coverage” for K2 vs KU @Meriam Guellil, and that’s actually a different one:

• in KU, they use unique_kmers_for_clade / genome_size (https://github.com/fbreitwieser/krakenuniq/blob/2ac22bf7681223efa17ffba221231c7faac9da05/src/taxdb.hpp#L1107)

• in K2, they don’t provide it, but imply that it would be uniq_kmers_for_clade/total_kmers_for_clade https://github.com/DerrickWood/kraken2/pull/249#issuecomment-638311769

*Thread Reply:* but you can have a high read count while still having a low unique kmer count which is what they point out in the paper, so the ratio for sure makes a difference. you can just use the base ratio it one its own too

*Thread Reply:* Yes I agree with Meriam, normally the good hits have way more kmers than reads and when it’s not the case I doubt more the validity of the hit

*Thread Reply:* what can happen though is that you have a super high coverage genome in a utopic world and you have the whole genome covered multiple times in which case the unque kmer count wouldn't go up anymore but the read count will. So if the cov is 1, then there is no point.

*Thread Reply:* Fortunately, we are not used to work with utopic data 😂 but yes you are right 👍

*Thread Reply:* I see. But in that case, would it make sense to give more emphasis to the coverage ?

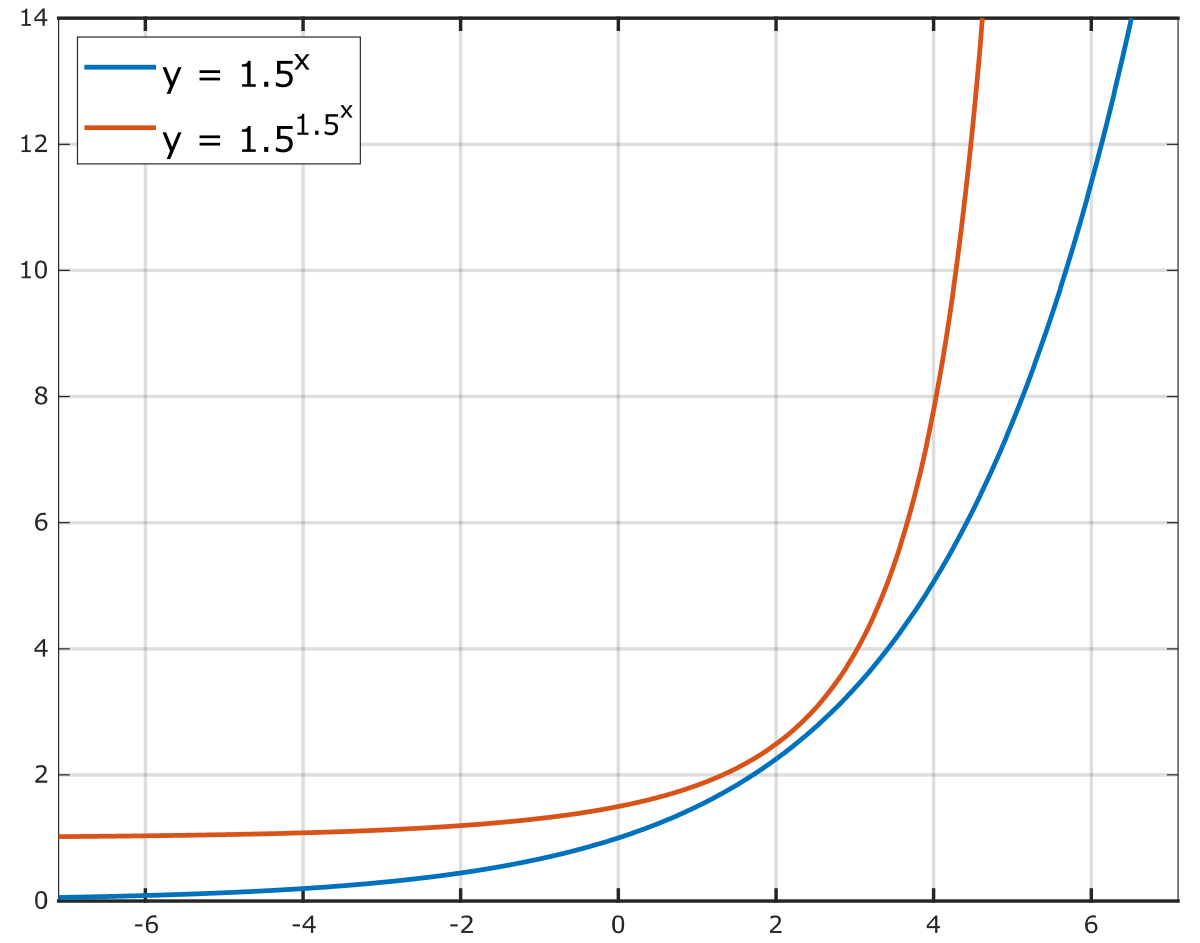

Maybe something like E = K/R ** double_exp(C)

This way for:

• low coverage, high duplication: K<<R so K/R -> 0, C~=0 so dodouble_exp(C) ~ 1, then E->0

• low coverage, low duplication: K/R -> ~(read_len - kmer_len), double_exp(C) ~ 1 , E->~(read_len-kmer_len) because you have up to read_len - kmer_len kmers of length kmer_len in a read of length read_len (read_len - kmer_len + 1 to be precise)

• high coverage (up to 1, because KU uses uniq kmer for “coverage” computation), low duplication: K/R -> 1 ,double_exp(C) -> inf , E-> inf

The assumption being that for low coverage, the K/R ratio is what matters, for high coverage, the coverage is what matters

*Thread Reply:* Sounds good! I am/was lacking the mathematical background to go deeper into the rabbit hole I am afraid so happy to see this improved. I will have a look 🙂

*Thread Reply:* Interesting suggestion @Maxime Borry, but why to collapse them into a single variable, why not to filter with respect to each of them separately? The thing is that 2 out of the three variables seem to be orthogonal / independent. Then constructing a single variable out of two orthogonal might reduce the quality of filtering

*Thread Reply:* I mean to filter in 3-dimensional space is probably more intuitive than in 1-dimensional

*Thread Reply:* We can construct different functional combinations out of the 3 KU stats, with different asymptotics, but do we really need to have one single threshold for filtering?

*Thread Reply:* For example the way GATK filters good from poor quality variants, it uses 17 QC metrics and implements different thresholds for each metric. In our case, for example, we can compute distributions of kmers, cov, reads, taxReads and dup and exclude organisms in the tails of the distributions

*Thread Reply:* Next, one can e.g. compute a PCA on the kmers, reads, taxReads, cov and dup stats, where data points in the PCA plot are the oganisms, and one could perhaps detect clusters of organisms with good QC metrics and poor QC metrics. That would be a multivariate filtering approach. Fir example, a PC1 could be a linear combination of the 4-5 KU stats that can be used in case one definitely wants just one single threshold. But why not to filter in 4-5 dimensional space? It is not too many dimensions to tackle

*Thread Reply:* Hmm, that’s another interesting approach

*Thread Reply:* Acutally, just realised that it would need a “double exponential” function for the coverage, because KU cov will be a max of 1 (since it’s using unique kmers). https://en.wikipedia.org/wiki/Double_exponential_function

*Thread Reply:* I made a small notebook to compare the effect of adding the double-exp to the coverage